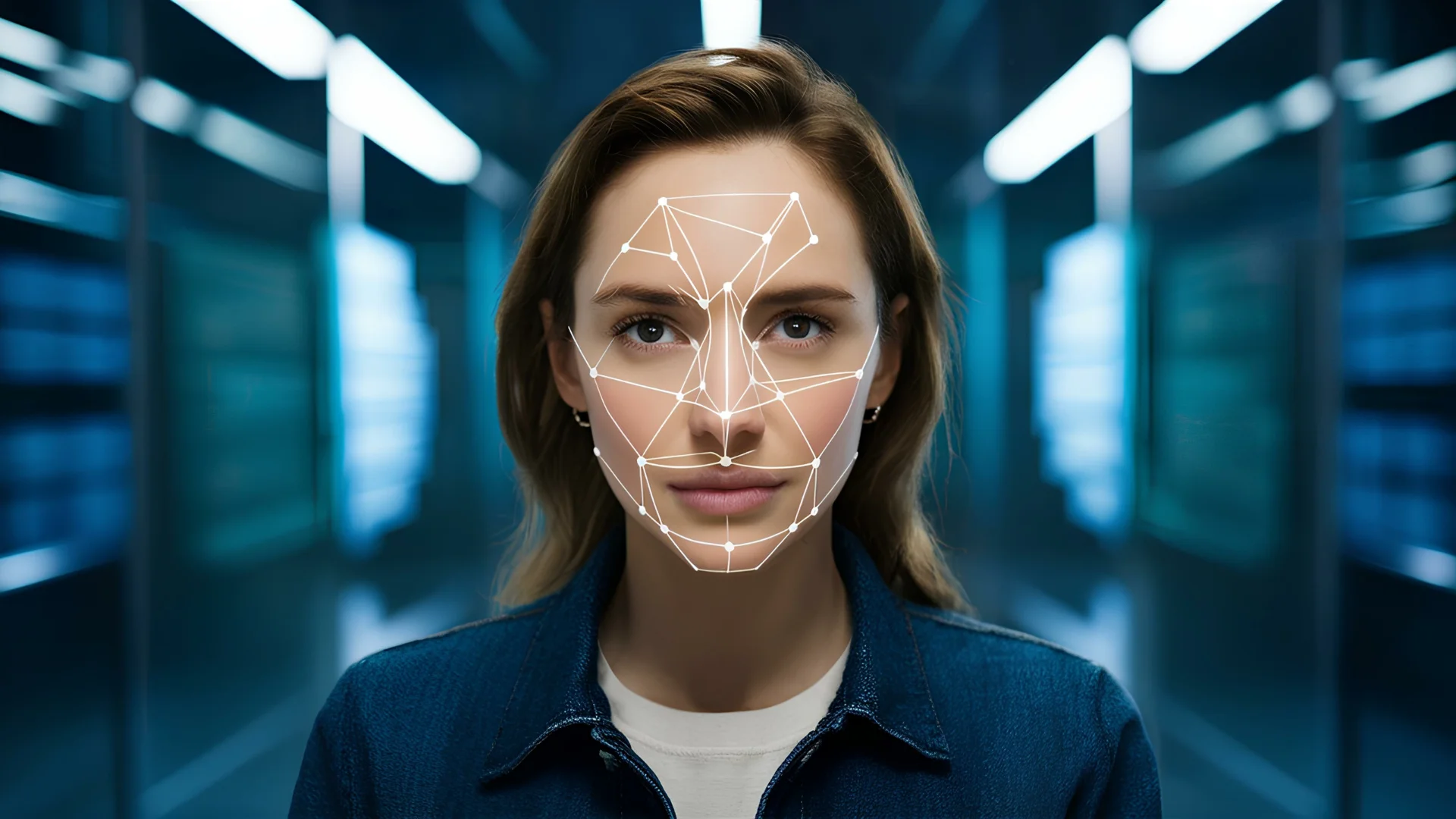

Introduction

Deepfake fraud isn’t a series of isolated scams—it’s an industry. Behind every voice clone or fake onboarding video lies a thriving supply chain that mirrors legitimate SaaS platforms: subscription tiers, customer support, dashboards, even service-level guarantees. This blog takes you inside the evolving ecosystem industrializing synthetic media—and shows where organizations can break the chain before it reaches them.

Key Takeaways

- Deepfake production has evolved from hobbyist experiments to an industrialized market with ready-to-use tools and subscription models.

- Darknet “fraud-as-a-service” operations now offer real-time voice and video deepfakes for as little as $30–$50 per instance.

- Attackers chain deepfakes into multi-channel playbooks—combining AI-generated emails, calls, and video to exploit business workflows.

- Regulations like the EU AI Act (Article 50) and provenance frameworks such as C2PA are redefining disclosure and audit requirements.

- Organizations can “break the chain” through upstream API monitoring, midstream platform hardening, and downstream verification workflows.

Inside the Supply Chain Fueling Synthetic Fraud

Everyone sees the scam. Almost no one sees the factory behind it.

A CFO approves a payment after a “quick call.” A fake video glides through KYC. We treat each incident as a one-off breach. It isn’t. Deepfakes are now industrialized products—sold like SaaS with tiers, dashboards, support, and SLAs. While security teams fight symptoms, the supply chain keeps humming, cheaper and more accessible every month.

If you want to stop deepfake fraud, you have to understand how it’s built, sold, and deployed—and where to break the chain before it reaches your front door.

From Garage Project to Assembly Line

Five years ago, convincing deepfakes required GPUs, expertise, and time. Today? Point → click → generate.

- Open-source hubs ship ready-to-use face swap and voice clone tools.

- Commercial platforms offer real-time video manipulation with Canva-simple UIs.

- Zero-shot voice cloning works from a few seconds of audio (e.g., a LinkedIn clip).

- Darknet menus now list live deepfake services with $30–$50 entry prices—higher tiers add customization, lower latency, and “premium support.”

- Diffusion models have largely displaced older GANs, reducing visible artifacts and leaving detectors to play catch-up.

Result: Technical barriers collapsed. Quality and speed spiked.

Fraud-as-a-Service Grows Up

Production tools are the raw materials; FaaS is the logistics network.

- Subscriptions & tiers: Basic / Pro / Enterprise, usage caps, support tickets.

- Turnkey “campaigns”: Kits chain voice cloning, session hijacking, and obfuscation—no expertise required.

- Payments & distribution: Privacy coins, gift cards, PayPal variants; vendors compete on quality, turnaround, and CSAT across Telegram/Discord/forums.

- At scale: Industrial scam operations (often forced-labor call centers) adopt AI content generation with multi-language scripts, quotas, and playbooks.

This isn’t niche—it’s an ecosystem.

Deployment: Coordinated, Multichannel Playbooks

Attackers don’t use deepfakes in isolation—they chain tools:

- Executive impersonation / BEC: AI-written email → SMS nudge → “quick call” with cloned voice or live face-swap; urgency seals the deal.

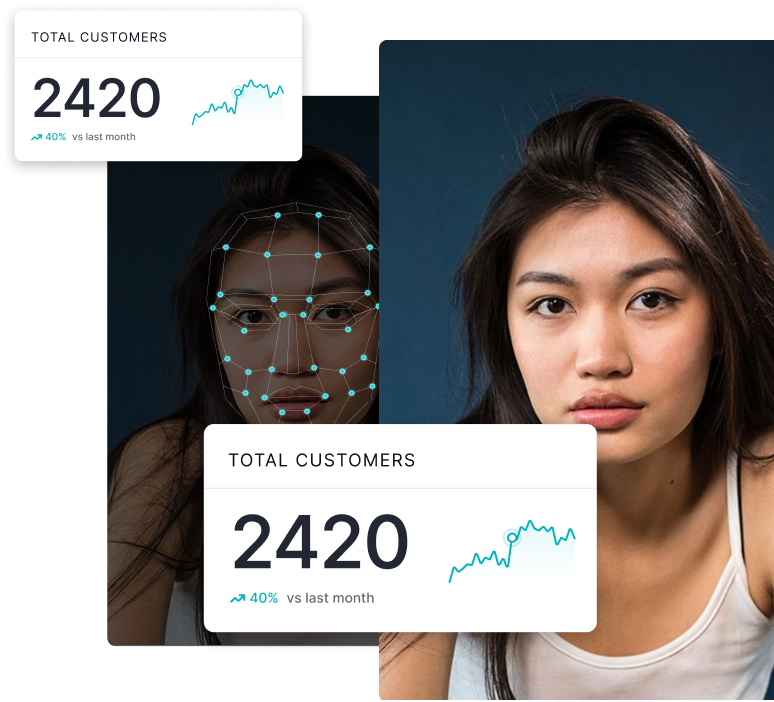

- KYC/AML bypass: Altered IDs + screen injection to defeat liveness checks + session spoofing; onboarding becomes the attack surface.

- Romance & investment scams: Studio-generated personas, “live” chats, proof-of-life clips on demand; growth rates in some regions reach triple-digit percentages.

Behind the scenes: LLMs craft pretexts, voice cloning runs in real time, and live video overlays complete the illusion. The whole playbook spans email, chat, phone, and video, and it is scripted and scalable.

The Economics Favor Attackers

A convincing deepfake costs about $30 to create; the potential payout runs to five or six figures. Defenders face an asymmetry: creating a fake is cheaper than preventing one. Regulators are starting to close the gap:

- EU AI Act (Art. 50): Deepfake disclosure and auditable labeling expectations.

- Provenance standards (C2PA / Content Credentials): Durable metadata for origin/edits/AI involvement—if your stack preserves it.

- Market signal: Enterprises must prove authenticity and maintain auditable workflows for high-risk comms. “We didn’t know” won’t fly.

Where To Break the Chain

You can’t shut down the global factory, but you can choke the points where it intersects with your organization.

Upstream: Control What Feeds the Factory

- Treat internal voice/video APIs like payment infrastructure.

- Monitor for abuse patterns: rate spikes, anomalous usage, poor key hygiene.

- Apply KYC to the developers who hold API keys, not just to end users.
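The "rate spikes" signal above can be approximated with a simple baseline comparison. The following is a minimal sketch, assuming you already collect per-key request counts for fixed time windows; the function name `is_rate_spike`, the z-score threshold, and the minimum baseline size are illustrative assumptions, not a recommended production detector.

```python
from statistics import mean, pstdev


def is_rate_spike(history, current, z_threshold=3.0, min_baseline=5):
    """Flag `current` if it sits far above an API key's recent baseline.

    `history` holds request counts from earlier windows for one key.
    All thresholds here are hypothetical defaults; tune them on real traffic.
    """
    if len(history) < min_baseline:
        return False  # too little data to establish a baseline

    baseline_mean = mean(history)
    # Floor the spread so a perfectly flat baseline does not flag tiny changes.
    baseline_spread = max(pstdev(history), 1.0)

    z_score = (current - baseline_mean) / baseline_spread
    return z_score > z_threshold
```

For example, `is_rate_spike([10, 12, 11, 9, 10, 11], 200)` flags the key, while a current count of 12 against the same history does not. A real deployment would likely combine this with other signals, such as new source networks or unusual hours, before acting on a key.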

Midstream: Harden Platforms & Channels

- Push vendors for upload-time and in-session detection in collaboration and contact-center tools.

- Ingest and preserve C2PA/Content Credentials—don’t strip metadata during processing.

Downstream: Verify Before You Trust

- Risk-based step-ups: Out-of-band confirmation for voice/video-initiated payment or credential changes; short holds on irreversible actions.

- Explainable detection at checkpoints: Combine liveness, document forensics, and behavioral signals; demand human-readable reasons for flags.

- Assume forgery in playbooks: Train for “verify via separate channel” as default, not exception.
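The risk-based step-up rule above can be written down as a small policy function. This is a sketch under stated assumptions: the action names, channel names, and control labels are hypothetical placeholders, and a real policy engine would draw them from your own risk taxonomy.

```python
from dataclasses import dataclass

# Hypothetical labels; replace with your organization's own taxonomy.
HIGH_RISK_ACTIONS = {"payment", "credential_change"}
SYNTHETIC_PRONE_CHANNELS = {"voice", "video"}


@dataclass(frozen=True)
class Request:
    action: str
    channel: str
    irreversible: bool = False


def required_controls(request):
    """Return the extra controls to apply before approving a request."""
    controls = []
    initiated_by_media = request.channel in SYNTHETIC_PRONE_CHANNELS
    if request.action in HIGH_RISK_ACTIONS and initiated_by_media:
        # Confirm through a separate, pre-registered channel.
        controls.append("out_of_band_confirmation")
        if request.irreversible:
            # Short hold so the action can still be stopped.
            controls.append("short_hold")
    return controls
```

Calling `required_controls(Request("payment", "voice", irreversible=True))` returns both controls, while the same payment requested through a channel outside the set returns none. Keeping the rule this explicit makes it easy to audit and to change as the threat picture shifts.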

Intelligence & Response

- Pipe indicators from INTERPOL/ISACs and industry feeds into your detection models.

- Review and version your controls and detections weekly, not annually.

What “Good” Looks Like in 90 Days

Week 1–2: Pilot real-time audio/video detection on one high-risk workflow (vendor banking changes, executive approvals, VIP resets).

Week 3–4: Enable C2PA/Content Credentials across CMS, DAM, and social ingestion pipelines.

Week 5–6: Mandate out-of-band verification for any voice/video-initiated payment or credential change—bake it into approvals.

Week 7–8: Instrument everything: log channel, proofs, reason codes; create an audit-ready trail.

Week 9–10: Refresh training to focus on “prove it’s real” (not “spot the fake”).

Week 11–12: Measure outcomes: fewer unauthorized changes, more high-risk interceptions, faster analyst decisions with explainable alerts.
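The Week 7–8 instrumentation step (log channel, proofs, reason codes) could be sketched as one structured record per verification decision. The schema below is an assumption made for illustration: the field names and the function `build_audit_record` are hypothetical and do not come from any particular product or standard.

```python
import json
from datetime import datetime, timezone


def build_audit_record(workflow, channel, proofs, reason_codes, decision, now=None):
    """Serialize one verification decision as a single JSON line.

    The field names are a hypothetical schema. `now` can be injected
    for testing; otherwise the current UTC time is used.
    """
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    record = {
        "timestamp": timestamp,
        "workflow": workflow,
        "channel": channel,
        "proofs": sorted(proofs),
        "reason_codes": sorted(reason_codes),
        "decision": decision,
    }
    # Sorted keys keep lines stable, which simplifies diffing and review.
    return json.dumps(record, sort_keys=True)
```

Appending one such line per decision to write-once storage gives reviewers a trail showing which channel a request arrived on, what proof was collected, and why it was allowed or held.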

You won’t eliminate the threat—but you’ll become uneconomical to attack.

The Real Problem Isn’t Tech—It’s Efficiency

Creation is cheap. Distribution is organized. Deployment is scripted. The entire operation is an efficiency machine. Awareness training alone can’t beat an industrial process.

Sustainable defense means building verification into workflows exactly where synthetic media touches high-risk decisions. Make forgery unprofitable by making it fail reliably—at the interaction layer, with proof over perception.

How DuckDuckGoose AI Helps

DuckDuckGoose integrates explainable deepfake detection directly into KYC, AML, customer support, and approval workflows.

- Real-time, multimodal detection across voice, video, and documents

- Explainable outputs that show why content was flagged (regions, anomalies, manipulation type, confidence)

- Provenance-ready ingestion aligned with EU AI Act and C2PA

- Seamless integration into your existing risk and verification stack—no re-architecture

We turn porous processes into verifiable ones—and make fraud harder than it’s worth.

Learn how we can help secure your workflows →

Break The Fraud Chain

Instrument upstream, midstream, and downstream checkpoints with provenance and explainable detection from DuckDuckGoose.
