Introduction

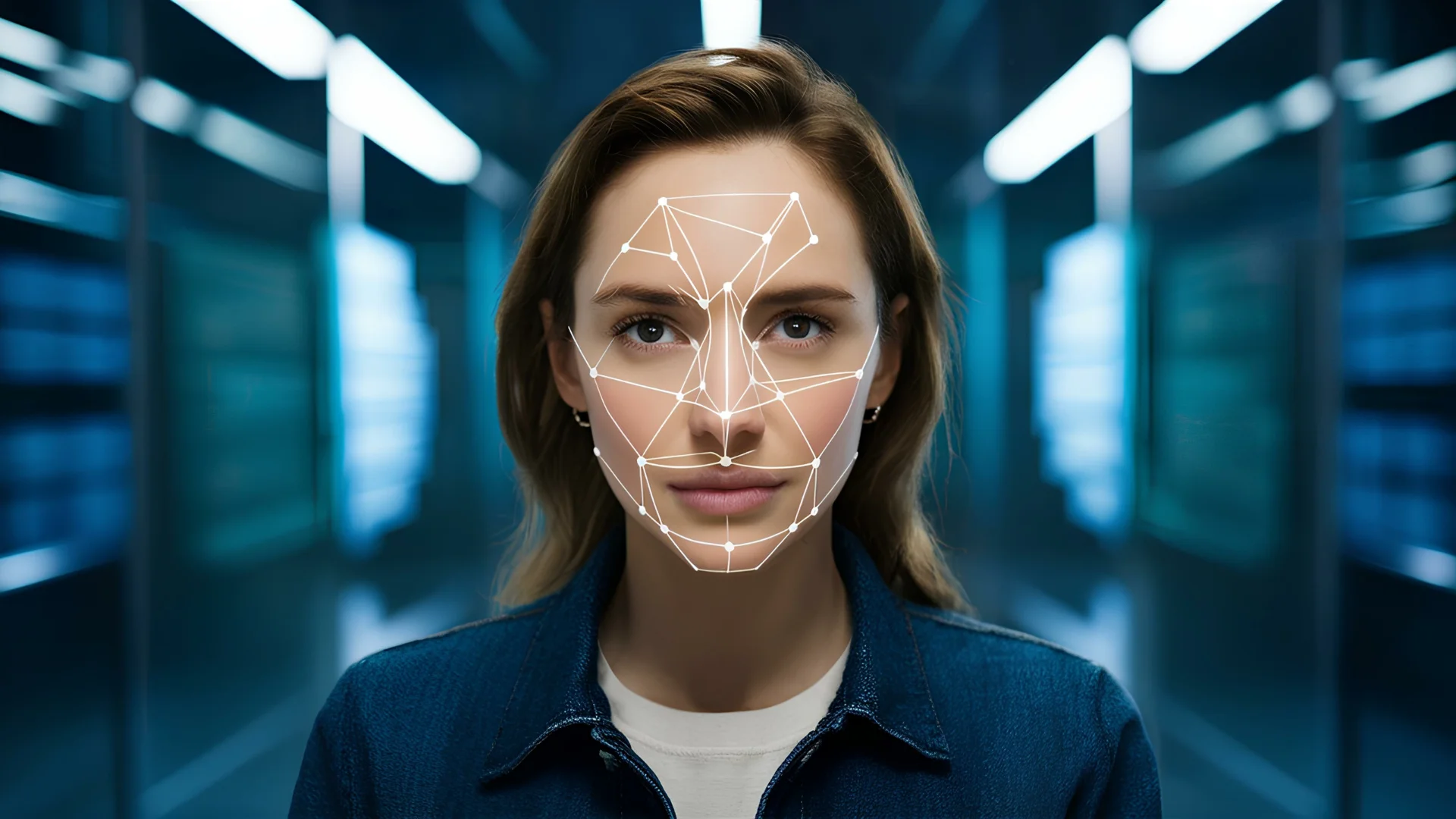

Deepfakes have become one of the defining technological challenges of our time. These AI-generated videos, voices, and images can convincingly imitate real people, making them nearly impossible to detect by sight or sound alone. Fortunately, artificial intelligence is just as powerful at defense as it is at deception. This article breaks down how AI detects deepfakes, why explainability matters, and what the next generation of detection technology looks like.

Key Takeaways

- Deepfake detection uses layered AI analysis across visuals, audio, and liveness to reveal synthetic media.

- Explainable AI (XAI) improves trust by showing exactly why content was flagged as fake.

- Modern detection models can exceed 90% accuracy, especially when they combine multiple modalities.

- Challenges remain: false positives, novel deepfake techniques, and high computational demands.

- The future of detection lies in transparency, integration, and innovation through transformer-based and privacy-preserving AI models.

The Algorithm Says It’s Fake. But Your Analyst Has No Idea Why.

The fraud analyst stares at the screen. An AI system has flagged an account application with 89% probability of being synthetic. She opens the case.

The selfie looks fine. Lighting is normal. Natural blinking. Nothing obviously wrong.

She has 47 more flagged cases to clear before end of shift. The system says this one is suspicious—but won’t say why. Her choices:

- Reject and risk losing a legitimate customer

- Approve and risk letting fraud through

- Hunt for 15 minutes to guess what the algorithm saw (and likely fail)

This plays out hundreds of times a day across financial institutions. The detection is powerful; the explanation is missing. That black box costs teams time, trust, customers, and money.

The Black Box Problem Nobody Talks About

“95% accuracy” is meaningless if your team can’t act on the result.

Flag 10,000 cases/month with no reasons and you’ve created an operational bottleneck:

- Analyst burnout & drag: Without guidance, reviews stretch from 2 to 15 minutes. Alert fatigue sets in.

- Untunable false positives: You can’t fix what you can’t see. A recurring lighting artifact can trigger hundreds of false flags—invisible to you.

- Compliance exposure: EU AI Act demands transparency; FinCEN expects documented decision logic. “87% fake” isn’t audit-ready rationale.

The irony: you buy AI to catch more fraud—then hire more people because the AI won’t explain itself.

What “Explainable” Actually Means (And Why It Matters)

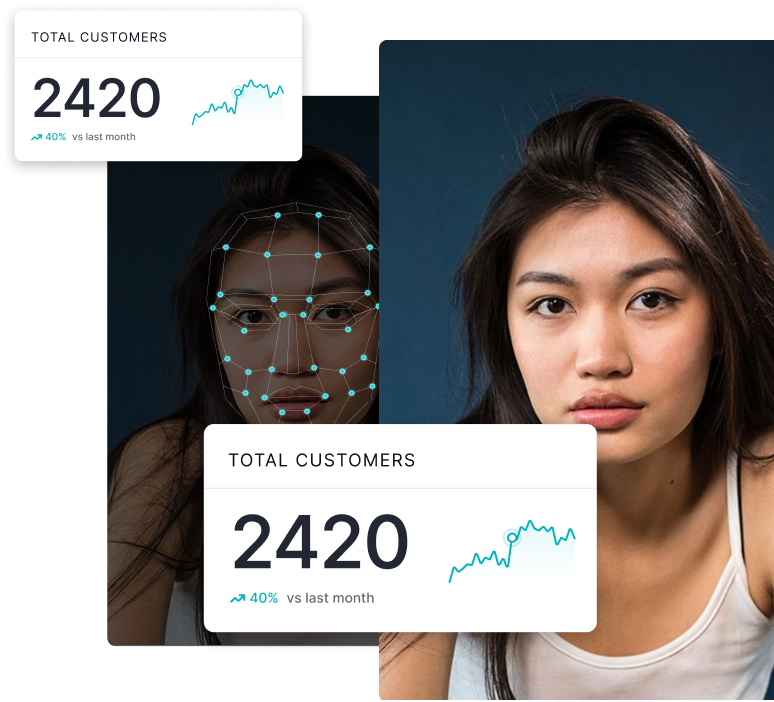

True explainability isn’t dumbing down the math—it’s surfacing signals your team can use:

- Where: Highlight specific regions (e.g., “left cheek lighting inconsistency,” “forehead skin-texture anomaly,” “unnatural nasal shadow geometry”).

- What: Name the manipulation type (face swap, lip-sync, fully synthetic, presentation attack). Responses differ by threat.

- How sure: Show confidence per signal so analysts can weigh strong vs. borderline cues.

- Evidence: Visual overlays, per-region scores, audio spectrogram markers—tangible proof, not just a probability.
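To make the four elements above concrete, here is a minimal sketch of what an explainable flag could look like as a data structure. It is illustrative only: the class names, field names, the 0.8 threshold, and the per-signal values are assumptions made for this example, not the output format of any particular product.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Signal:
    """One piece of evidence behind a flag (all field names are hypothetical)."""
    region: str        # where: e.g. "left cheek"
    anomaly: str       # what was observed: e.g. "lighting inconsistency"
    confidence: float  # how sure: 0.0 to 1.0 for this signal alone


@dataclass
class DetectionResult:
    """A flag that carries its reasons, not just an overall score."""
    overall_score: float    # overall probability that the media is synthetic
    manipulation_type: str  # e.g. "face swap", "lip-sync", "fully synthetic"
    signals: List[Signal] = field(default_factory=list)

    def strong_signals(self, threshold: float = 0.8) -> List[Signal]:
        """Return signals at or above the threshold, strongest first."""
        strong = [s for s in self.signals if s.confidence >= threshold]
        return sorted(strong, key=lambda s: s.confidence, reverse=True)

    def summary(self) -> str:
        """Render a short, analyst-readable explanation."""
        lines = [f"{self.overall_score:.0%} likely synthetic ({self.manipulation_type})"]
        for s in sorted(self.signals, key=lambda s: s.confidence, reverse=True):
            lines.append(f"- {s.region}: {s.anomaly} ({s.confidence:.0%})")
        return "\n".join(lines)


# Example values are invented for illustration.
result = DetectionResult(
    overall_score=0.89,
    manipulation_type="face swap",
    signals=[
        Signal("left cheek", "lighting inconsistency", 0.91),
        Signal("forehead", "skin-texture anomaly", 0.62),
        Signal("nose", "unnatural shadow geometry", 0.84),
    ],
)
print(result.summary())
```

With a structure like this, an analyst (or a review UI) can start from the strongest signals and treat borderline ones, such as the 62% forehead cue here, as supporting context rather than proof.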

Impact: review time drops from 15 to 2 minutes. False positives become obvious. New-hire ramp shrinks from weeks to days. And you unlock a feedback loop to improve models faster than any black box can.

Why Most Systems Stay Opaque

Because building explainability is harder than building accuracy:

- Deep nets learn patterns across millions of parameters—not inherently human-readable.

- “Explainability” add-ons often become theater: vague heatmaps with no actionable semantics.

- Some vendors hide logic as proprietary IP, undermining operational value.

- Real interpretability requires architectures designed for it: capturing intermediate signals (lighting, texture, geometry) and surfacing them coherently for analysts.

The Hidden Costs of Black Box Detection

- Manual review overhead: +10 minutes × 2,500 flags/month = 25,000 minutes (~$21k/month, $250k/year) in avoidable spend.

- Conversion loss from false positives: Even a 1% false-positive rate at scale rejects hundreds of good customers. With $500 CLV and 10% abandonment, you’re leaking ~$150k/year.

- Compliance risk: Unexplained rejections are audit time bombs.

Sum it up and the black box can cost more than it saves—even when raw accuracy is “good.”
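The cost figures above can be reproduced with a few lines of arithmetic. This is a rough sketch, not a pricing model: the article does not state an analyst cost or a verification volume, so the `hourly_cost` of $50 and the 25,000 verifications per month below are assumptions chosen because they are consistent with the quoted ~$21k/month and ~$150k/year.

```python
# Manual review overhead, using the figures quoted above.
extra_minutes_per_flag = 10
flags_per_month = 2_500
hourly_cost = 50.0  # ASSUMPTION: not stated in the article; implied by ~$21k/month

extra_minutes = extra_minutes_per_flag * flags_per_month
extra_hours = extra_minutes / 60
monthly_cost = extra_hours * hourly_cost
annual_cost = monthly_cost * 12

print(f"{extra_minutes:,} extra minutes = {extra_hours:,.0f} hours/month")
print(f"${monthly_cost:,.0f}/month, ${annual_cost:,.0f}/year")


def annual_conversion_loss(verifications_per_month, false_positive_rate,
                           abandonment_rate, clv):
    """Yearly revenue lost when wrongly flagged customers abandon signup."""
    false_flags = verifications_per_month * false_positive_rate
    lost_customers = false_flags * abandonment_rate
    return lost_customers * clv * 12


# ASSUMPTION: 25,000 verifications/month is a hypothetical volume.
loss = annual_conversion_loss(25_000, 0.01, 0.10, 500)
print(f"${loss:,.0f}/year in lost customer value")
```

Swapping in your own volume, loaded analyst cost, and abandonment rate gives a quick estimate of what an unexplained flag costs your operation.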

What Actually Works: Accuracy × Speed × Explainability

Enterprise-scale detection only works when all three are true at once:

- Accuracy: 95%+ in real-world conditions (not just the lab) with <0.5% false positives. Otherwise fraud gets through or good customers get blocked.

- Speed: Sub-second so verification is invisible; slow checks kill UX and conversion.

- Explainability: Human-readable reasons so teams can tune, train, document, and improve. Without it, you’re flying blind.

Anything less introduces friction, cost, or risk that erodes ROI.

Beyond “Accuracy %”: Evaluate What Matters

Ask vendors:

- Can analysts see what was detected and where? Scores alone = black box.

- Does it catch novel deepfake types? Show generalization beyond training data.

- What’s the false-positive rate at your volumes? 1% of 100k = 1,000 bad flags.

- What’s the real production latency? Demos aren’t device-diverse reality.

- Can non-PhDs act on outputs? If not, it won’t scale in operations.

- Is the model adaptive to your fraud patterns? Static models go stale fast.

These answers predict whether the tech reduces or adds workload.
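For the false-positive question in particular, it helps to translate a vendor's quoted rate into monthly case counts. The sketch below does that for the three rates mentioned in this article, assuming the 100k monthly volume from the example above; the variable names are illustrative.

```python
monthly_volume = 100_000  # verifications per month, as in the example above

# False-positive rates quoted in this article
rates = {"1%": 0.01, "0.5%": 0.005, "0.1%": 0.001}

# Number of legitimate users wrongly flagged each month at each rate
false_flags = {label: round(monthly_volume * rate) for label, rate in rates.items()}

for label, count in false_flags.items():
    print(f"False-positive rate {label}: {count:,} bad flags per month")
```

Each of those flags is either a manual review or a rejected customer, which is why the rate matters more at your volume than in a demo.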

The Regulatory Pressure Is Building

- EU AI Act: Biometric verification = high-risk → transparency required.

- FinCEN: Expects documented controls and decision processes.

- MAS (Singapore): Expects validated, tested AI; explainability is implied.

Opaque detection is a compliance liability in waiting.

When Detection Actually Works

- Analyst productivity: Review times down ~80%; false positives fall with targeted tuning; trust in the system rises.

- Financial impact: ROI in year one via fewer manual hours, more fraud caught, fewer lost customers, clean audits.

- No security vs. UX trade-off: High-accuracy, explainable, sub-second = secure and seamless.

The Real Competitive Advantage

Deepfake generation is cheap; “detection” is commodity talk. Operationalizable detection—the kind your team can see, trust, and act on—is the edge.

Most firms have tools that catch deepfakes but can’t explain them, creating a slow, expensive, brittle process. Leaders insist on explainability as a first-class feature, not a slide in a demo.

Because when you verify hundreds of thousands of identities a month, “the algorithm says it’s fake” is useless. You need to know why, where, and what to do next.

How DuckDuckGoose AI Solves the Explainability Problem

We built our platform because black box detection was accurate—but unusable in production.

What’s Different About Our Approach

- Explainability by design: Every flag ships with visual evidence (which regions, which anomalies, which manipulation type, and per-signal confidence). Analysts see reasons, not just scores.

- Actionable reviews: Teams cut review time by ~80% because they go straight to the evidence instead of hunting for it.

- Production-grade accuracy: 95–99% detection accuracy with <0.1% false positives in real-world conditions, including novel deepfake techniques.

- Real-time, invisible UX: Sub-second analysis keeps legitimate users flowing while fraud is stopped before it enters your system.

- Adaptive models: Continuous learning from emerging attack patterns keeps detection ahead of adversaries.

- Compliance-ready records: Each decision includes auditable reasoning aligned with EU AI Act, FinCEN, and financial-sector guidance.

Bottom line: Not just better detection—detection your team can operationalize.

Make AI Explain It

Give analysts evidence and confidence scores—not just a number—so they can act fast.
